Standing up any new analytics tool in an organization is complex, and early on, new adopters of Tableau often struggle to include all the complexities in their plan. This post proposes a mental model in the form of a story of how Tableau might have rolled out in one hypothetical installation to uncover common challenges for new adopters.

Standing up any new analytics tool in an organization is complex, and early on, new adopters of Tableau often struggle to include all the complexities in their plan. This post proposes a mental model in the form of a story of how Tableau might have rolled out in one hypothetical installation to uncover common challenges for new adopters.

Tableau’s marketing lends one to imagine that introducing Tableau is easy: “Fast Analytics”, “Ease of Use”, “Big Data, Any Data” and so on. (here, 3/31/2017). Tableau’s position in Gartner’s Magic Quadrant (referenced on the same page) attests to the huge upside for organizations that successfully deploy Tableau, which I’ve been lucky enough to witness firsthand. Continue reading

As I mentioned in the

As I mentioned in the

ta science or warehousing context, which is often where quality problems

ta science or warehousing context, which is often where quality problems

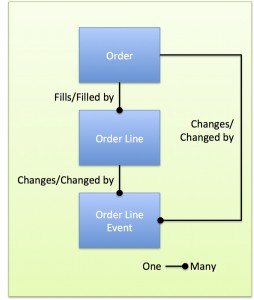

As important as it is, data modeling has always had a geeky, faintly impractical tinge to some. I’ve seen application development projects proceed with a suboptimal, “good enough”, model. The resulting systems might otherwise be well-architected, but sometimes strange vulnerabilities emerge that track directly to data design flaws.

As important as it is, data modeling has always had a geeky, faintly impractical tinge to some. I’ve seen application development projects proceed with a suboptimal, “good enough”, model. The resulting systems might otherwise be well-architected, but sometimes strange vulnerabilities emerge that track directly to data design flaws.