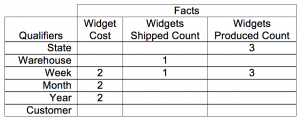

At the very first TDWI Conference, Duane Hufford described a phenomenon he called “embedded data”, now more commonly called “overloaded data”, where two or more concepts are stuffed into a single data field (“Metadata Repositories,” TDWI Conference 1995). He described and portrayed in graphics three types of overloaded data. Almost 20 years later, overloaded data remains rampant but Mr Hufford’s ideas, presented below with updated examples, are unfortunately not widely discussed.

At the very first TDWI Conference, Duane Hufford described a phenomenon he called “embedded data”, now more commonly called “overloaded data”, where two or more concepts are stuffed into a single data field (“Metadata Repositories,” TDWI Conference 1995). He described and portrayed in graphics three types of overloaded data. Almost 20 years later, overloaded data remains rampant but Mr Hufford’s ideas, presented below with updated examples, are unfortunately not widely discussed.

[Note: in March of 2021 I added one further category, bundling. – BL]

Overloaded data breeds in areas not exposed to sound data management techniques for one reason or the other. Big data acquisition typically loads data uncleansed, shifting the burden of unpacking overloaded fields to the receiver (pity the poor data scientist spending 70% of her time acquiring and cleaning data!)

One might refer to non-overloaded data as “atomic”. Beyond making data harder to use, overloaded data requires more code to manage than atomic data (see why in the sections below) so by extension it increases IT costs.

Here’s a field guide to three different types of overloaded data, associated risks, and how to avoid them: Continue reading →

Yesterday I had the pleasure of presenting “The Business End of Data Modeling” for the Lynchburg SQL Server User’s Group. It was a great time, thanks for having me out!

Yesterday I had the pleasure of presenting “The Business End of Data Modeling” for the Lynchburg SQL Server User’s Group. It was a great time, thanks for having me out!