Modern data architectures, by enabling data analytics insights, promise to drive order-of-magnitude value gains across many business sectors (here, here, and here). Not so long ago, big data presented a daunting challenge. Although tools were plentiful, we struggled to conceptualize the architecture and organization within which to capitalize on those tools. Now solid frameworks have emerged. This post reviews two promising models for modern data architecture, and discusses two cultural values critical to their successful adoption: the drive to solve business challenges and the drive for universal data correctness. Continue reading

Category Archives: Data Science

Fixing Tableau Desktop Blue Screen or Unresponsive

Tableau Desktop (10.2.2 on Windows 7 at work) was consistently locking up my computer or causing a BSOD when I tried to start it. After struggling for a while to solve the problem, I found that Tableau was exhausting system resources while opening its log file, which had grown over time to 24 GB. Apparently my version of Tableau Desktop doesn’t periodically clean up its log files.

However, if the …/Logs folder isn’t there at Tableau startup, it just builds a new one and starts fresh, so whenever Tableau isn’t running you can just delete it. So, to make that happen automatically, I’ve added a batch file with these commands to my startup folder: Continue reading
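
The batch commands themselves are in the full post. As a rough equivalent, here is a minimal Python sketch of the same idea, not the author's script. The function name `clear_tableau_logs` and the `logs_dir` argument are hypothetical; you would pass in the path to your own Logs folder, and it assumes Tableau is not running when it executes.

```python
import shutil
from pathlib import Path


def clear_tableau_logs(logs_dir):
    """Delete the given Logs folder if it exists.

    Returns True if a folder was removed, False if there was nothing to do.
    Assumptions: Tableau is not running, and it rebuilds the folder on its
    next startup (as described above). `logs_dir` is supplied by the caller.
    """
    path = Path(logs_dir)
    if not path.is_dir():
        return False  # no Logs folder, so nothing to clean up
    shutil.rmtree(path)  # removes the folder and everything inside it
    return True
```

Run at login (for example from a startup task), this keeps the log folder from growing between sessions.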

Leader’s Data Manifesto Annual Review: “It’s About the Lopez Women”

A year ago I recounted proceedings from the 2017 EDW World conference, which included release of the Leader’s Data Manifesto (LDM). Last week’s EDW World 2018 served as a one-year status report on the Manifesto. The verdict: there’s still a long way to go, but speakers and attendees report dramatic progress and emergence of shared values supporting data management’s role in enabling success and reducing risk.

To me the most compelling example of progress was the story of the Lopez women, told by Tommie Lawrence, who leads patient data quality efforts at Sharp Healthcare, a major San Diego, CA, healthcare network. Ms. Lawrence’s team is responsible for data quality related to about six million patient records in the 40 highest-priority systems among Sharp’s ~400 systems containing Patient Health Information (PHI).

A few years ago, Sharp Healthcare had two patients named Maria Lopez*, with birthdays one day apart. One suffered from kidney disease, the other had cancer. After a long wait a kidney was found, and the hospital called the Maria with kidney disease and asked her to come to the hospital for a transplant immediately. During operation prep, an assistant noticed that Maria had cancer, and put a halt to proceedings – it didn’t make sense to give the kidney to someone with cancer. Continue reading

Escaping Teradata Purgatory (Select Failed. [2646] No more spool space)

Also see the related post More on “Select Failed. [2646] No more spool space”

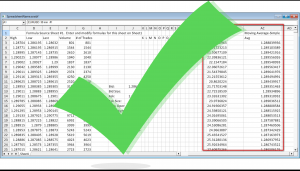

If you are a SQL developer or data analyst working with Teradata, it is likely you’ve gotten this error message: “Select Failed. [2646] No more spool space”. Roughly speaking, Teradata “spool” is the space DBAs assign to each user account as work space for queries. So, for example, if your query needs to build an intermediate table behind the scenes to sort or otherwise process before it hands over your result set, that happens in spool space. It is limited, in part, to keep your potentially runaway query from using up too much space and clogging up the system.

After briefly setting the stage, this post presents the top three tactics I use to avoid or overcome spool space errors. For the last two tactics I’ll show working code. At the end of the post you’ll find volatile table DDL that you can use to get the queries to run. Continue reading

The Practical Metadata Business Case

Even now the business case for a metadata tool seems unclear and difficult to quantify, but it isn’t impossible.

We in the data management business tend to devalue solutions that don’t clearly derive from a coherent top-level view. We seek applications defined from an enterprise architecture, database designs from an enterprise data model, and data elements consistent with the enterprise business glossary.

However, sometimes tactical gains make sense even when the big picture is missing, and tactical successes of metadata for analytics teams can raise consciousness that helps set the stage for evolving data management improvements. Continue reading

Data Quality, Evolved

Data quality doesn’t have to be a train wreck. Increased regulatory scrutiny, NoSQL performance gains, and the needs of data scientists are quietly changing views and approaches toward data quality. The result: a pathway to optimism and data quality improvement.

Here’s how you can get on the new and improved data quality train in each of those three areas: Continue reading

A New Direction for Data at #EDW17

Obviously, data management is important. Unfortunately, it is not prioritized in most organizations. Those that effectively manage data perform far better than organizations that don’t. Everyone who needs data to do his/her job must drive change to improve data management.

That was the theme of this week’s Enterprise Data World (EDWorld) conference. This year’s EDWorld event might be the start of a new vitality and influence for the field, marked by the introduction of a Leader’s Data Manifesto.

Over the years, data practitioners struggled for recognition and resources within their organizations. In reaction, they often focused on data “train wrecks” that this neglect causes. This year’s conference was no exception. For example: Continue reading

Tableau Rollout Across Five Dimensions

Standing up any new analytics tool in an organization is complex, and early on, new adopters of Tableau often struggle to include all the complexities in their plan. This post proposes a mental model in the form of a story of how Tableau might have rolled out in one hypothetical installation to uncover common challenges for new adopters.

Tableau’s marketing leads one to imagine that introducing Tableau is easy: “Fast Analytics”, “Ease of Use”, “Big Data, Any Data” and so on (here, 3/31/2017). Tableau’s position in Gartner’s Magic Quadrant (referenced on the same page) attests to the huge upside for organizations that successfully deploy Tableau, which I’ve been lucky enough to witness firsthand. Continue reading

Analytics Requirements: Avoid a Y2.xK Crisis

Even though it happens annually, teams building new visualizations often forget to think about the effects of turning over from one year to another.

In today’s fast-paced, Agile world, requirements for even the most critical dashboards and visualizations tend to evolve, and development often proceeds iteratively from a scratchpad sketch through successively more detailed versions to release of a “1.0” production version. Organized analytics teams evolve dashboards within a process framework that includes checkpoints to ensure standards are met for security, reliability, usability, and so on.

A reporting team can build a revolutionary analytics capability enabling unprecedented visibility into operations, and then, if year turnover isn’t included in requirements, experience embarrassing errors and usability challenges in the January after initial deployment. In effect, the system experiences its own Y2.xK crisis, not too different from the expected Y2K crisis 16 years ago. Continue reading
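
To make the failure mode concrete, here is a small hypothetical Python illustration (not taken from the post) of one common way it shows up: a "previous month" calculation that works from February through December and breaks in January. Both function names are assumptions made for this sketch.

```python
from datetime import date


def previous_month_naive(today):
    """Naive version: subtracts one from the month and ignores year turnover."""
    return (today.year, today.month - 1)


def previous_month(today):
    """Year-aware version: January rolls back to December of the prior year."""
    if today.month == 1:
        return (today.year - 1, 12)
    return (today.year, today.month - 1)
```

For `date(2017, 1, 15)`, the naive version returns `(2017, 0)`, a month that does not exist, while the year-aware version returns `(2016, 12)`. A requirement that names the January case explicitly is what prompts someone to write the second version.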

Tableau Startup: First Lessons Learned

As I mentioned in the February post, I’m new to Tableau and, as the tone of that post implied, enjoying it very much. Tableau is a robust and flexible solution for data delivery. Like QlikView, which I worked with a while ago, it is supported by outstanding, and free, introductory training and a very active user community.

As I’ve taken my first steps in Tableau I’ve been a frequent visitor to the user community, and have generally gotten the answers I was looking for. However, as with any tool, there have still been a few surprises. I’ll run down the top few in this post:

- Measures can have complex logic

- Big extracts are tricky

- Changing data sources is really tricky

- Sorry, there are some things you just can’t do

Hopefully this post helps other novices negotiate those first few steps a bit more easily. Continue reading