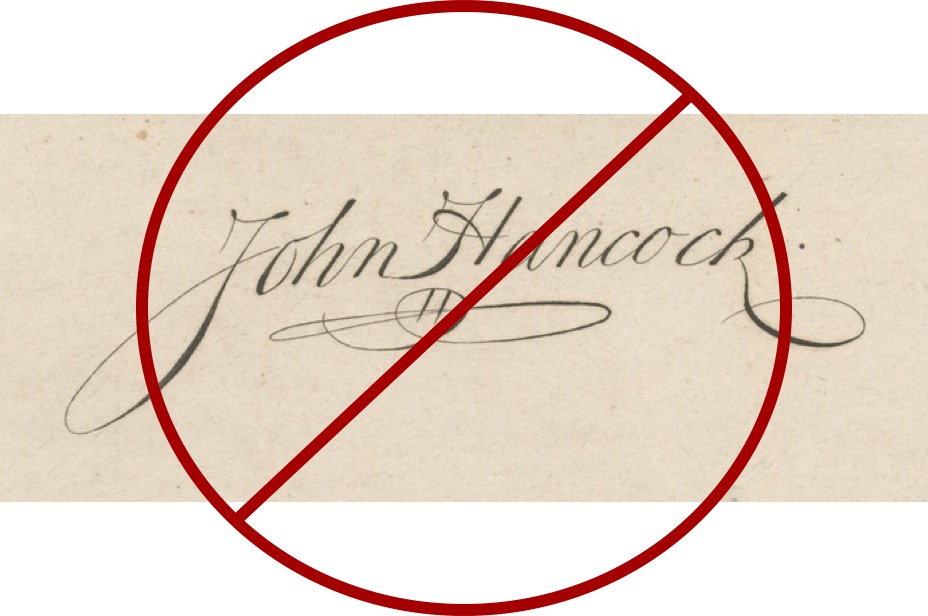

Although Agile writers and thinkers agree that “there is no sign-off” in Agile methodology, the practice of requiring product owners and business customers to sign off on requirements and delivered work products persists in Agile settings. I’ve seen it most when an agile team faces delivery challenges and leaders perceive the problem is scope creep or failure of the UAT process before delivery. In those situations, adding a formal sign-off provides an illusion of a stronger process but does nothing to resolve the underlying issue.

Although Agile writers and thinkers agree that “there is no sign-off” in Agile methodology, the practice of requiring product owners and business customers to sign off on requirements and delivered work products persists in Agile settings. I’ve seen it most when an agile team faces delivery challenges and leaders perceive the problem is scope creep or failure of the UAT process before delivery. In those situations, adding a formal sign-off provides an illusion of a stronger process but does nothing to resolve the underlying issue.

Sure, sometimes sign-off is necessary, especially when two or more separate organizations work together on a project. For example, consulting contracts often require sign-off on interim and final work products. However, addition of a sign-off step is common within organizations in hopes of a remedy for delivery or quality challenges.

The commenter David on this post says that “the purpose of a sign-off (or whatever you wanna call it) is a confirmation from a product owner that artifact A is fine for the time being, and can be used as basis for work on artifact B.” That’s all well and good, but in a well-run Agile context sign-off is a meaningless formality that’s dispensed with because it’s unnecessary.

How could that be? Others have written, often emphatically, on why sign-off is unnecessary in an agile context, including here and here. This quick video explains how “definition of done” and a fully committed, reliable team work together together make sign-off irrelevant. Continue reading →

A while back I wrote the post

A while back I wrote the post

Although Agile writers and thinkers agree that “

Although Agile writers and thinkers agree that “ Frequently in my career I’ve selected or helped select ETL and reporting professionals who need SQL skills. For some of those opportunities, placement firms returned resumes with interminable, and nearly identical, lists of technical achievements with excruciating unnecessary detail (paraphrasing: “Wrote SELECT statements using GROUP BY”, “Applied both inner and outer joins”). Before interviewing we typically ranked candidates in order of preference based on resumes. Candidates’ interview success bore little relation to resume-based rankings.

Frequently in my career I’ve selected or helped select ETL and reporting professionals who need SQL skills. For some of those opportunities, placement firms returned resumes with interminable, and nearly identical, lists of technical achievements with excruciating unnecessary detail (paraphrasing: “Wrote SELECT statements using GROUP BY”, “Applied both inner and outer joins”). Before interviewing we typically ranked candidates in order of preference based on resumes. Candidates’ interview success bore little relation to resume-based rankings.

Sometimes success seems like a data analytics team’s worst enemy. A few successful visualizations packaged up into a dashboard by a small skunkworks team can generate interest such that a year later the team has published scores of mission critical dashboards. As their use spreads throughout the organization, and as features expand to meet the needs of an expanding user base, the dashboards can slow down and data refreshes fail as they exceed

Sometimes success seems like a data analytics team’s worst enemy. A few successful visualizations packaged up into a dashboard by a small skunkworks team can generate interest such that a year later the team has published scores of mission critical dashboards. As their use spreads throughout the organization, and as features expand to meet the needs of an expanding user base, the dashboards can slow down and data refreshes fail as they exceed  In our current “social distancing” situation, many are working remotely in a serious way for the first time. As one who’s worked full time from home for the past four years, and frequently before that, I thought I should share some tips based on experience. Below are my top three tips and then some of the gear that I’ve used to set up a comfortable workspace.

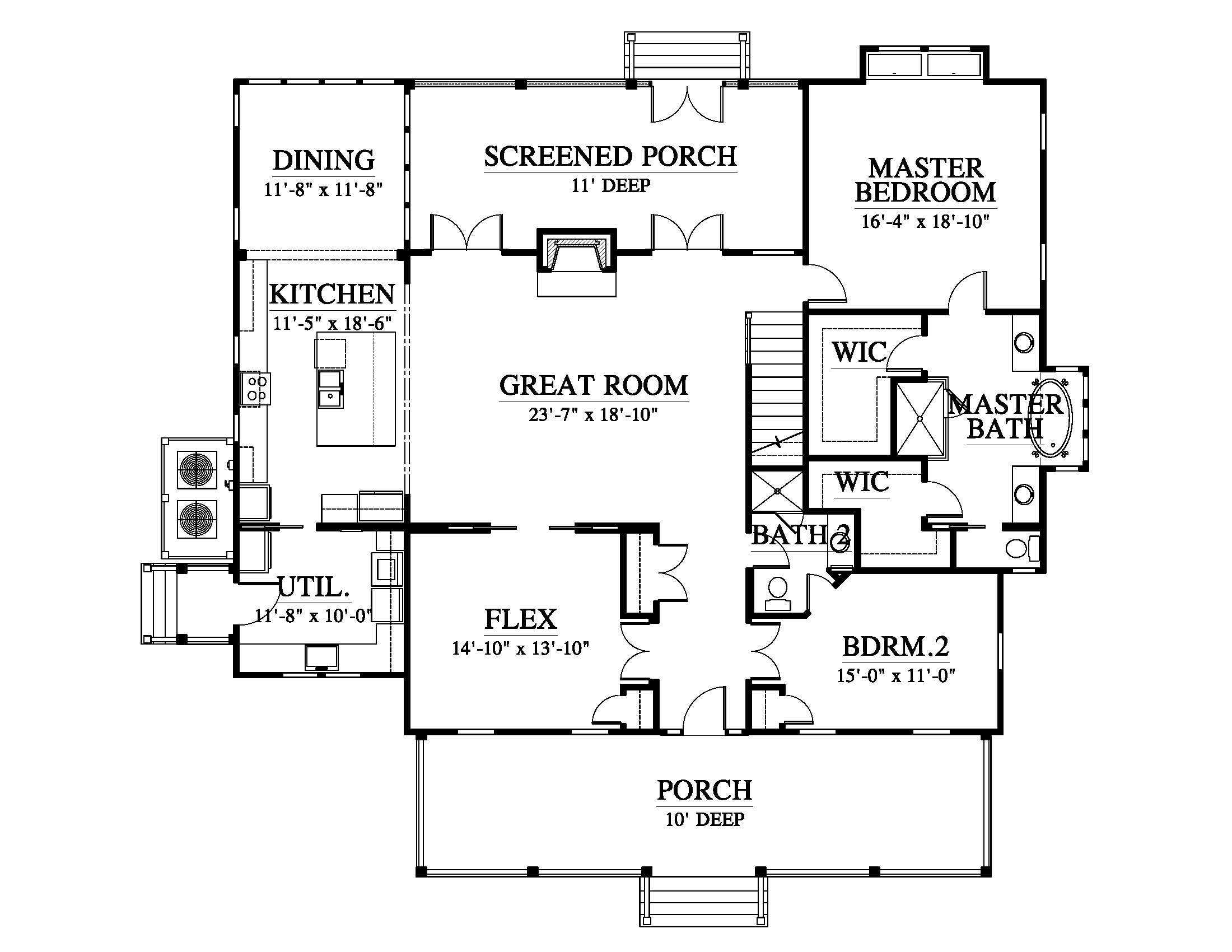

In our current “social distancing” situation, many are working remotely in a serious way for the first time. As one who’s worked full time from home for the past four years, and frequently before that, I thought I should share some tips based on experience. Below are my top three tips and then some of the gear that I’ve used to set up a comfortable workspace. It’s not unusual for talented teams of business analysts to find themselves maintaining significant inventories of Tableau dashboards. In addition to sound development practices, following two key principles in data source design help these teams spend less time in maintenance and focus more on building new visualizations: publishing Tableau data sources separately from workbooks and waiting until the last opportunity to join dimension and fact data.

It’s not unusual for talented teams of business analysts to find themselves maintaining significant inventories of Tableau dashboards. In addition to sound development practices, following two key principles in data source design help these teams spend less time in maintenance and focus more on building new visualizations: publishing Tableau data sources separately from workbooks and waiting until the last opportunity to join dimension and fact data. Not too long ago I

Not too long ago I